By Mehdi Paryavi

The rapid evolution of information technology, cloud, data center facilities, IoT, big data, cyber security, business sustainability, and national security has exposed unresolved infrastructure issues as well as shortcomings in the practices and guiding principles of the past.

As a result, key questions remain unanswered: How do we address the ever-growing challenges of data center cooling, ensure power efficacy, and build redundancy into components and paths while eliminating unnecessary costs? How can we obtain the necessary resilience, remain efficient, and achieve security and safety at the same time? How do we align a staff of platform, network, storage, security, electrical, and mechanical engineering professionals to serve a single common goal? How do we deploy application delivery models that actually meet our unique business and application needs, rather than making do with “one size fits all” solutions?

The problem has never been about advising individuals how to design or operate electrical or mechanical infrastructure, nor has it ever been about configuring switches, routers, and storage devices. The problem has always been about helping people understand how to design, deploy, and operate such infrastructure cohesively, so that it delivers data and applications according to business requirements. Developing a collective mindset in which all practices and resources are driven by common business requirements is therefore fundamental to meeting the needs of the modern era.

According to common industry guidelines, the design requirements for the data center facilities of factories, airports, and military bases are the same. However, it is easy to recognize that each of these institutions has a vastly different mission, and therefore different operational requirements. Yet the prevailing guidance is so blind to this that it dictates that high availability cannot be achieved for any one of these data centers if it is located too close to the others. Clearly, we need mission-specific guidance that factors in the unique operational requirements of industries and organizations.

Further, organizations famously suffer from a disconnect between IT and facilities, which often results in critical and costly misalignments. They also lack clear guidance on how the use of cloud providers or cloud technologies affects their overall application performance.

These challenges are compounded by a workforce that too often cleaves to “the way we have always done it,” or, conversely, reflexively adopts technologies simply because they are new and buzzworthy. There is a measurable shortage of skilled workers who understand both the operational disciplines and the technical advancements that must combine to propel an organization toward a focused objective. This shortage is aggravated by the speed of technological evolution, which makes it very difficult for organizations to keep their staff trained and up to date while maintaining the rigors of daily operations.

How do we address the dichotomies we all face: operations vs. infrastructure, distribution vs. consolidation, capex vs. opex, energy efficiency vs. resilience, security vs. complexity, and more, all while staying within budget? How do we address the non-technical trends that may affect our organization, such as legislation and governance, geopolitics, climate or sustainability trends, and others?

There is a pressing need for a universal language and shared understanding among all stakeholders in the data center industry. This need can surface in the fine details, such as the lack of an industry-wide color-coding scheme for cabling and piping, which increases the probability of outages caused by human error as workers move from one company to another. It can also surface at the broader strategic level, where service providers and their customers have no common basis for quantifying and qualifying performance requirements and capabilities.

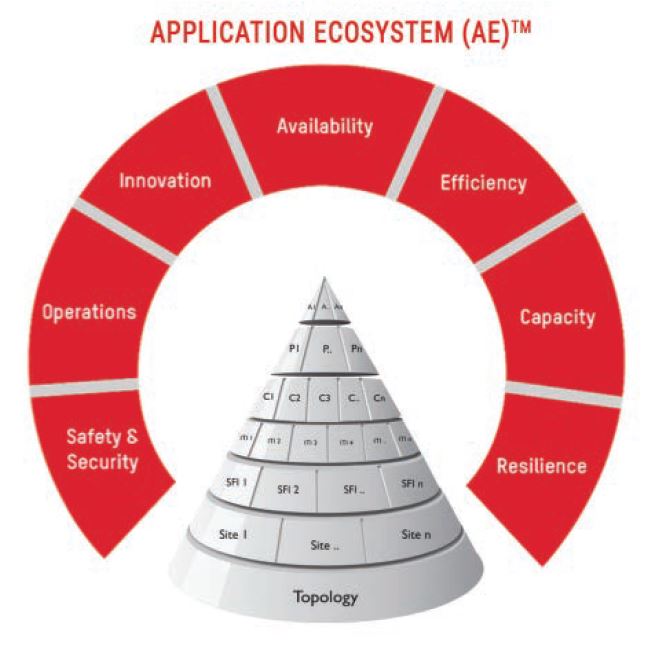

The Infinity Paradigm® is the model for reducing business risk and for optimizing performance and aligning it with defined business requirements. It visualizes and analyzes an entire information technology system as a holistic, dynamic model that qualitatively and quantitatively illustrates the myriad interdependencies of its supporting components. This model is called the application ecosystem™, and it consists of several physical layers of technical infrastructure as well as conceptual or logical layers derived from those physical layers.

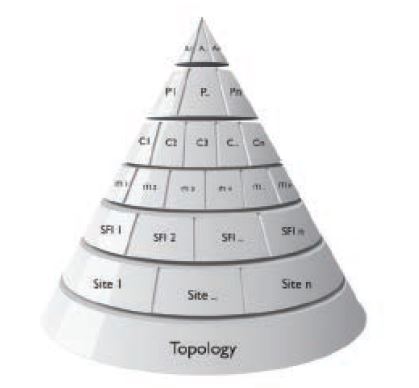

These layers are commonly depicted as a pyramid to illustrate the interdependent infrastructure stack. From the foundation upward, the layers are Topology, Site, Site Facilities Infrastructure (SFI), Information Technology Infrastructure (ITI), Compute (C), Platform (P), and finally Application (A).

Each layer except Topology can comprise multiple discrete instances of infrastructure working in concert to support the layers above it. These instances may have a one-to-one, one-to-many, or many-to-many relationship with the instances of infrastructure in the layer below.

Application (A) layer: A set of software services that fulfill organizational requirements. For example, an ERP application is composed of accounting, payroll, inventory, and asset management software services.

Platform (P) layer: The methodology by which the application is delivered. Common delivery platforms are Business as a Service (BaaS), Software as a Service (SaaS), Platform as a Service (PaaS), Infrastructure as a Service (IaaS), and Nothing as a Service (NaaS).

Compute (C) layer: A logical layer where the processing requirements of the application are defined in abstract form. The compute cloud then maps to actual virtual or physical cores in the IT infrastructure layer.

Information Technology Infrastructure (ITI) layer: A set of instances of network infrastructure, physical servers, data storage, and other IT infrastructure used to support application delivery.

Site Facilities Infrastructure (SFI) layer: The collection of facilities infrastructure components that support a data center node. The SFI layer specifies the power, cooling, and other infrastructure and related services that a data center node requires to meet business requirements.

Site layer: An independently operated space that houses site facilities infrastructure, such as land, buildings, utilities, and civil infrastructure.

Topology layer: Specifies the physical locations, interconnectivity, and interrelation of data center nodes (in effect, a map of data center sites).
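The layer stack and the instance relationships described above can be modeled as a small data structure. The Python sketch below is illustrative only: `Layer`, `Instance`, and `relationship` are hypothetical names, not part of any published Infinity Paradigm® tooling, and the sketch assumes that links are recorded as (upper instance, lower instance) pairs between directly adjacent layers.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import IntEnum


class Layer(IntEnum):
    """Application ecosystem layers, numbered from the foundation upward."""
    TOPOLOGY = 0
    SITE = 1
    SFI = 2          # Site Facilities Infrastructure
    ITI = 3          # Information Technology Infrastructure
    COMPUTE = 4
    PLATFORM = 5
    APPLICATION = 6


@dataclass(frozen=True)
class Instance:
    """One discrete instance of infrastructure within a layer (hypothetical model)."""
    name: str
    layer: Layer


def relationship(links):
    """Classify how upper-layer instances map onto the layer directly below.

    `links` is an iterable of (upper, lower) Instance pairs (an assumed format).
    """
    lowers_per_upper = defaultdict(set)
    uppers_per_lower = defaultdict(set)
    for upper, lower in links:
        # Only links between directly adjacent layers are accepted.
        if upper.layer - lower.layer != 1:
            raise ValueError(f"{upper.name} does not sit directly above {lower.name}")
        lowers_per_upper[upper].add(lower)
        uppers_per_lower[lower].add(upper)
    # One upper instance relies on several lower instances.
    fans_out = any(len(s) > 1 for s in lowers_per_upper.values())
    # One lower instance serves several upper instances.
    shared = any(len(s) > 1 for s in uppers_per_lower.values())
    if fans_out and shared:
        return "many-to-many"
    if fans_out:
        return "one-to-many"
    if shared:
        return "many-to-one"
    return "one-to-one"
```

For example, two applications delivered through one shared SaaS platform instance would classify as `"many-to-one"`, while a single application spread across two platform instances would classify as `"one-to-many"`. The sketch reports the direction explicitly rather than folding both cases into "one to many".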


Performance within each application ecosystem layer is classified by Grade Levels, or Gs. Gs range from G4 to G0: G4 represents the maximum allowable level of operational vulnerabilities, while G0 essentially mandates the total elimination of all such vulnerabilities. G0 thus represents comprehensive excellence in the key efficacies.

To understand the interdependencies of the application ecosystem (AE) layers, and to assess how well they support the AE's business requirements, a set of common criteria has been defined for which design guidance is provided and measurements and calculations can be performed. These criteria are grouped into categories called “efficacies,” which are common across all layers; they include Availability, Capacity, Operations, Safety and Security, Efficiency, and Innovation. Crossing the layers with the efficacies creates a matrix, and this matrix is the heart of the standards framework. The resulting metrics and calculations are used to derive an Efficacy Score Rating (ESR®) for each efficacy.
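One way to picture this matrix is as a grid of G grades keyed by (layer, efficacy). The Python sketch below is a hypothetical illustration, not IDCA's assessment method and not the ESR® calculation: it assumes the business sets one required grade per efficacy, and it flags every assessed cell whose grade number is higher (that is, tolerates more vulnerabilities) than that requirement. The function names and input format are invented for this example.

```python
# Layers (foundation first) and efficacies as named in the text.
LAYERS = ("Topology", "Site", "SFI", "ITI", "Compute", "Platform", "Application")
EFFICACIES = ("Availability", "Capacity", "Operations",
              "Safety and Security", "Efficiency", "Innovation")


def parse_grade(label: str) -> int:
    """Convert 'G0'..'G4' to 0..4; a higher number tolerates more vulnerabilities."""
    if len(label) != 2 or label[0] != "G" or label[1] not in "01234":
        raise ValueError(f"not a grade level: {label!r}")
    return int(label[1])


def gaps(matrix: dict, targets: dict) -> list:
    """Return (layer, efficacy, actual, target) for cells that miss their target.

    matrix:  {(layer, efficacy): "G0".."G4"} assessed grades (hypothetical format)
    targets: {efficacy: "G0".."G4"} grade the business requires (an assumption)
    """
    shortfalls = []
    for layer in LAYERS:
        for efficacy in EFFICACIES:
            actual = matrix.get((layer, efficacy))
            target = targets.get(efficacy)
            if actual is None or target is None:
                continue  # cell not assessed, or no requirement set for this efficacy
            if parse_grade(actual) > parse_grade(target):
                shortfalls.append((layer, efficacy, actual, target))
    return shortfalls
```

For instance, if the SFI layer is assessed at G2 for Availability while the business requires G1, that cell is reported; an ITI cell already at G1 for Availability is not. Walking the matrix layer by layer keeps the output ordered from the foundation upward, which mirrors the pyramid.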

The efficacy score rating is the most reliable scoring mechanism for evaluating and comparing the performance of a complex system. It is the product of an algorithmic evaluation of the results of each layer’s G grade performance as well as the results of each efficacy’s performance across layers. This scoring methodology calculates the inherent effect of interdependencies and independencies across and between layers and efficacies, and applies weightings to functions that are more central to supporting the fundamental business requirements.

The ultimate purpose of evaluating the layers and efficacies is to produce an ESR® for the total system. This score provides a simple, straightforward, quantitative output in the form of a single number that represents the Application Ecosystem’s ability to reliably and sustainably meet business requirements. No other existing KPI provides such a simple, comprehensively informed view of a system as complex as an Application Ecosystem.

Comprehensive & Cloud-inclusive

The Infinity Paradigm® was founded to bridge existing industry gaps. It enforces a comprehensive view of the data center: its investment, design, people, assessment, and overall management. Virtualization is the essence of the modern data center, and the Infinity Paradigm® pioneered the introduction of cloud and virtualized services as a key factor in a next-generation standards framework.

Efficiency-Driven and Operations Conducive

Efficiency and operations are foundational elements of the Infinity Paradigm®. Our industry has favored availability and resilience over efficiency and operational effectiveness for far too long. IDCA’s philosophy holds that a data center’s availability, capacity, security, safety, compliance, and other attributes cannot be measured unless its operational requirements are fully evaluated and met. Only demonstrable operational competency with each component’s individual function, and with the interdependencies between components, fulfills the Infinity Paradigm’s compliance requirements.

International, Yet Localized

The Infinity Paradigm® was designed to bring the world’s best and brightest together, allowing all cloud, application, information technology, facility, and data center stakeholders to enjoy the synergy that global collaboration can provide. It is extensible and adaptable to localized technical, language, environmental, regulatory, political, and industrial requirements and capabilities. This unprecedented level of “reality-centric” customization allows for ease of access and enhanced efficiency, compliance, and effectiveness.

Effective & Application-Centric

One of the Infinity Paradigm’s core missions is to ensure both practicality and effectiveness. Its framework matrix makes possible a practical, intuitive, detailed, and holistic understanding of the entire ecosystem. As the first organization to acknowledge and promote application delivery as the purpose of data centers, IDCA has effectively redefined the data center as “an infrastructure supporting the Application Ecosystem (AE).” This application-centric approach eliminates redundant and goalless efforts, homing in on a data center’s true purpose and enabling stakeholders to set accurate expectations and properly align plans toward satisfying their application needs.

Vendor Neutrality

IDCA does not solicit or accept input from technology manufacturers, and therefore the standards framework is not biased toward or against any particular technologies or technical solutions.

Open Community Effort

The Infinity Paradigm® is a free, open data center framework available worldwide. It empowers functional performance on a global scale while adapting to local requirements. It provides stakeholders with a customizable data center framework that grants total control over Availability, Efficiency, Security, Capacity, Operations, Innovation, and Resilience based on the specific needs of the business.

The Infinity Paradigm® bridges existing industry gaps by providing a comprehensive open standards framework capable of handling the complexity of the post-legacy data center age. It maintains a focus on certain unique core values: comprehensiveness, effectiveness, practicality, and adaptability. This framework will grow and evolve over time through the input and collaboration of its users around the world, continuously redefining the very essence of what a data center is and does. It integrates legacy standards and guidelines in a forward-looking and practical way, remaining fully aware of their strengths and limitations. As a result, the Infinity Paradigm® provides data center designers, planners, builders, operators, and end users with a holistic, constructive approach that addresses the problems of today and the challenges of tomorrow.

Mehdi Paryavi is Chairman of the International Data Center Authority (IDCA®). He can be reached at chairman@idc-a.org.