By Steve Madara

The underlying trend behind the need for density in the data center today is the expanded use of processing-intensive applications, most notably artificial intelligence. Like current HPC deployments, these applications require the ability to process massive amounts of data with extremely low latency. But unlike HPC, that data isn’t just in the form of text and numbers. AI applications often process data from heterogeneous sources in multiple forms, including large image, audio, and video files. Entering 2020, 73% of AI applications were working with image, video, audio, or sensor data.

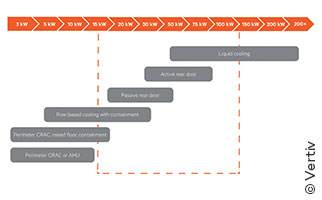

Chip manufacturers have responded to the growing demand for multi-format AI. Thermal power densities for leading CPUs and GPUs rose sharply in the last two years, after relatively modest growth in the previous five years. Intel’s Cannon Lake CPUs introduced in 2020, for example, had thermal power densities double that of the previous generation introduced just two years earlier. With more of these high-powered CPUs and GPUs being packed into 1U servers, and equipment racks being packed with these 1U servers, we are seeing a growing number of applications with rack densities of 30 kW or higher. Once rack densities rise above that level, air cooling reaches the limit of its ability to deliver effective cooling.
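As a rough illustration of how quickly 1U servers add up to that threshold, the following Python sketch estimates rack density from a server count and a per-server power draw. The 30 kW figure comes from the text above; the function names, the 40-server count and the 800 W per-server draw are hypothetical values chosen only for the example.

```python
# Level at which, per the article, air cooling reaches its practical limit.
AIR_COOLING_LIMIT_KW = 30.0


def rack_density_kw(servers_per_rack: int, watts_per_server: float) -> float:
    """Estimate total rack heat load in kW, assuming all power becomes heat."""
    if servers_per_rack < 0 or watts_per_server < 0:
        raise ValueError("server count and power draw must be non-negative")
    return servers_per_rack * watts_per_server / 1000.0


def at_air_cooling_limit(density_kw: float,
                         limit_kw: float = AIR_COOLING_LIMIT_KW) -> bool:
    """Return True when the rack is at or above the assumed air-cooling limit."""
    return density_kw >= limit_kw


# Hypothetical rack: 40 1U servers drawing 800 W each.
density = rack_density_kw(40, 800.0)
print(f"Rack density: {density:.1f} kW, "
      f"at air-cooling limit: {at_air_cooling_limit(density)}")
```

Real planning would use measured or nameplate power for the specific servers in the rack rather than a single average figure.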

Liquid Cooling Technologies

Like air cooling, liquid cooling is available in a variety of configurations that use different technologies, including rear-door heat exchangers, direct-to-chip cooling and immersion cooling.

Rear-door heat exchangers are a mature technology that doesn’t bring liquid directly to the server but does utilize the high thermal transfer properties of liquid. In a passive rear-door heat exchanger, a liquid-filled coil is installed in place of the rear door of the rack, and as server fans move heated air through the rack, the coil absorbs the heat before the air enters the data center. In an active design, fans integrated into the unit pull air through the coils to increase unit capacity.

In direct-to-chip liquid cooling, cold plates sit atop a server’s main heat-generating components to draw off heat through a single-phase or two-phase process. Single-phase cold plates use a cooling fluid looped into the cold plate to absorb heat from server components. In the two-phase process, a low-pressure dielectric liquid flows into evaporators, and the heat generated by server components boils the fluid. The heat is released from the evaporator as vapor and transferred outside the rack for heat rejection.

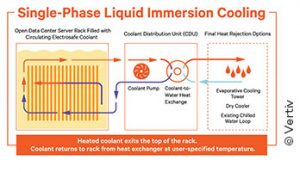

With immersion cooling, servers and other components in the rack are submerged in a thermally conductive dielectric liquid or fluid. In a single-phase immersion system, heat is transferred to the coolant through direct contact with server components and removed by heat exchangers outside the immersion tank. In two-phase immersion cooling, the dielectric fluid is engineered to have a specific boiling point that protects IT equipment but enables efficient heat removal. Heat from the servers changes the phase of the fluid, and the rising vapor is condensed back to liquid by coils located at the top of the tank.

Deployment Considerations

Each of these technologies has specific advantages that may make it right for a certain application, but each also comes with considerations that must be factored into planning and deployment, particularly when integrating liquid-cooled racks into an air-cooled data center.

For example, rear-door heat exchangers discharge cooled air into the data center, and air-cooling systems should have the capacity to handle increased heat loads if one or more rear-door heat exchangers are being serviced. Similarly, direct-to-chip cooling systems require air cooling support because they are applied only to specific components and generally capture only about 75% of the heat generated by the rack. In a 30 kW rack, that could leave 7.5 kW of heat load that must be managed by air cooling systems. Fluids used in these systems must also be carefully selected to properly balance thermal capture and viscosity to achieve desired capacity while optimizing pumping efficiency.
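The arithmetic behind that 7.5 kW figure can be sketched in a few lines of Python. The 75% capture fraction and the 30 kW rack are taken from the paragraph above; the function name and the idea of treating the capture fraction as a single fixed number are simplifying assumptions, since actual capture varies by server design.

```python
from typing import Tuple


def split_heat_load(rack_kw: float,
                    liquid_capture_fraction: float = 0.75) -> Tuple[float, float]:
    """Split a rack's heat load into (liquid_kw, air_kw).

    liquid_capture_fraction is the share of rack heat removed by the
    direct-to-chip loop; the remainder must be handled by air cooling.
    """
    if rack_kw < 0:
        raise ValueError("rack_kw must be non-negative")
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("liquid_capture_fraction must be between 0 and 1")
    liquid_kw = rack_kw * liquid_capture_fraction
    air_kw = rack_kw - liquid_kw
    return liquid_kw, air_kw


# The article's example: a 30 kW rack with about 75% liquid heat capture.
liquid_kw, air_kw = split_heat_load(30.0)
print(f"Liquid loop: {liquid_kw} kW, residual air load: {air_kw} kW")
```

Multiplying the residual air load by the number of liquid-cooled racks in a row gives a first estimate of the air-cooling capacity that must remain available.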

Immersion systems can operate without the support of air cooling and have the added benefit of eliminating the energy draw of server fans, but the fluids used in two-phase immersion systems remain expensive and have environmental, health and safety concerns that require systems to be designed to minimize vapor loss.

While deployment of liquid cooling is more complex than air cooling, the numerous benefits liquid cooling delivers in high-density applications are driving increased adoption, as operators seek out partners that can help them navigate these challenges. The most important of those benefits is the reliability and performance liquid cooling provides to high-density racks. As CPU case temperatures approach the maximum safe operating temperature, as is likely to occur when using air to cool high-density racks, CPU performance is throttled back to avoid thermal runaway, limiting application performance. Liquid cooling allows densely packed systems to operate continuously at their maximum voltage and clock frequency without overheating. Liquid cooling also improves energy efficiency, enables better space utilization and reduces data center noise levels.

Liquid Cooling Infrastructure

The introduction of liquid cooling into a data center relying exclusively on air cooling for IT equipment can be a challenge. Rear-door heat exchangers represent an easy first step into liquid cooling if the problem is limited to zones of high-density air-cooled IT gear. As direct-to-chip servers are introduced, there are simple liquid-to-air cooling distribution units (CDUs) to ease the transition.

Those CDUs are an example of how liquid cooling infrastructure is advancing to streamline deployment, support higher capacities with fewer components, and minimize risk.

CDUs form the foundation of the secondary cooling loop that delivers fluid to liquid cooling systems and removes heat from the fluid being used. Today’s CDUs enable strict containment and precise control of the liquid cooling system and can maintain liquid cooling supply temperature above the data center dew point to prevent condensation and maximize sensible cooling. They can be deployed at a rack level to support individual racks, in row or on the perimeter of the data center, and are available in capacities exceeding 1 MW to minimize the number of CDUs required in large deployments.
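To make the dew point constraint concrete, the following Python sketch estimates the room dew point from air temperature and relative humidity using the Magnus approximation, then checks whether a proposed liquid supply temperature stays above it. This is an illustration under stated assumptions, not how any particular CDU controller works: the function names, the 2 °C safety margin and the example room conditions are all hypothetical.

```python
import math

# Magnus approximation coefficients (a common parameter set for water vapor
# over liquid water; other published sets differ slightly).
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # degrees Celsius


def dew_point_c(air_temp_c: float, relative_humidity_pct: float) -> float:
    """Approximate dew point in deg C from air temperature and RH (%)."""
    if not 0.0 < relative_humidity_pct <= 100.0:
        raise ValueError("relative humidity must be in the range (0, 100]")
    gamma = (math.log(relative_humidity_pct / 100.0)
             + MAGNUS_A * air_temp_c / (MAGNUS_B + air_temp_c))
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)


def min_supply_temp_c(air_temp_c: float, relative_humidity_pct: float,
                      margin_c: float = 2.0) -> float:
    """Lowest supply temperature that stays margin_c above the dew point.

    margin_c is an assumed safety margin, not a value from the article.
    """
    return dew_point_c(air_temp_c, relative_humidity_pct) + margin_c


def supply_is_condensation_safe(supply_temp_c: float, air_temp_c: float,
                                relative_humidity_pct: float,
                                margin_c: float = 2.0) -> bool:
    """Return True if the supply temperature clears the dew point plus margin."""
    return supply_temp_c >= min_supply_temp_c(
        air_temp_c, relative_humidity_pct, margin_c)


# Hypothetical room conditions: 24 deg C at 50% relative humidity.
print(f"Dew point: {dew_point_c(24.0, 50.0):.1f} C")
print(f"18 C supply safe: {supply_is_condensation_safe(18.0, 24.0, 50.0)}")
```

Keeping the supply temperature above the dew point means the loop removes only sensible heat and no moisture condenses on piping or cold plates, which is the behavior the paragraph above describes.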

Another new development is the introduction of indoor chillers designed to support liquid cooling. For applications that don’t have access to chilled water in the data center or prefer not to tap into the existing chilled water system, indoor chillers provide an easy-to-deploy solution with free cooling capability. Variable-speed pumps allow the flow of refrigerant to adapt to changing loads, and internal controls maintain the leaving water temperature by controlling the speed of the pump. Some units even use the same footprint as perimeter cooling units to simplify retrofits and future-proof new data center designs.

Considerable progress has also been made in risk mitigation. The liquid-cooling systems being deployed today minimize the risk of bringing liquid to the rack by limiting the volume of fluids being distributed and integrating leak detection technology into system components and at key locations across the piping system. The Open Compute Project has released an excellent paper on leak detection technologies and strategies for liquid cooling applications.

Taking the Next Step

There is a lot to consider when deploying liquid cooling in the data center today. Everything from heat capture (technology and fluids) to distribution to heat rejection must be carefully engineered to minimize disruption to operations and balance the capacity and performance of air and liquid cooling systems working together in a hybrid environment. But the technologies, best practices and expertise are available to successfully navigate these challenges and introduce high-density, liquid-cooled racks into a wide range of data center environments. For a deeper dive into liquid cooling, see the white paper, Understanding Data Center Liquid Cooling Options and Infrastructure Requirements.

Steve Madara is VP of Global Data Center Thermal Management at Vertiv. He can be reached at [email protected].