
continued to build out Prineville and started construction on our future sites in Forest City, North Carolina; Luleå, Sweden; and Altoona, Iowa. But in the same spirit of hacking and iterating that Facebook brings to its product development, our teams are always looking for ways to carve out new efficiency gains.

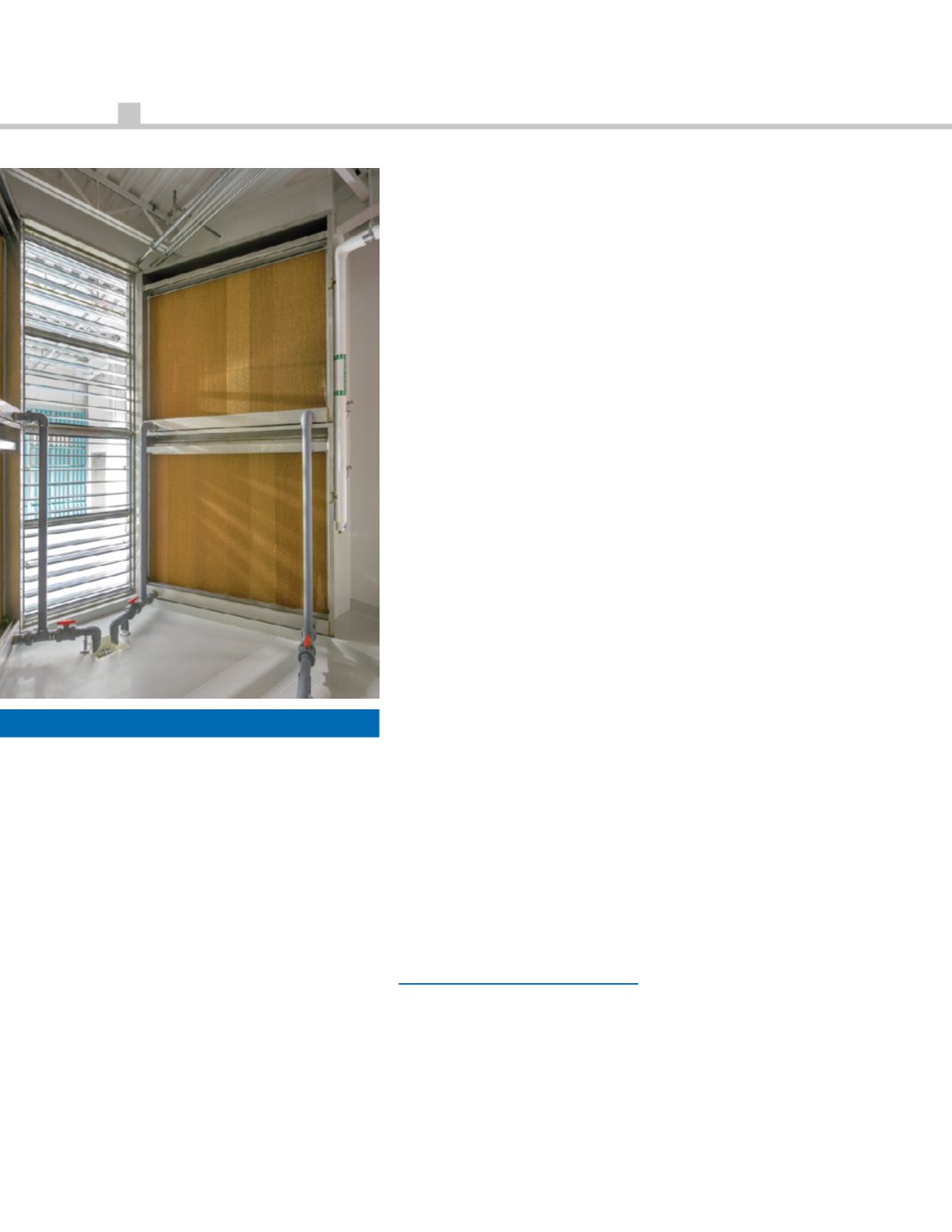

One small example can be found in how we evolved the evaporative elements of our cooling system. In our first building in Prineville, we built a wall of super-fine misters into one of the stages of the air-handling process in the penthouse. This system was effective, but it required that the water be treated through a reverse-osmosis (RO) system to ensure that the misting nozzles wouldn’t clog over time. For Building 2, we replaced the wall of misters with a wall of evaporative media that we can soak with water directly from the well we have on site. Not only did this design iteration allow us to remove the RO system from future buildings, but it also allowed us to lower our water consumption by an order of magnitude. Our water usage efficiency (WUE) over the last 12 months in Prineville is 0.028 L/kWh.
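
To make the 0.028 L/kWh figure concrete, here is a minimal Python sketch of the arithmetic behind WUE. It assumes The Green Grid's definition of the metric (site water usage in liters divided by IT equipment energy in kWh over the same period). The function name and the input figures are hypothetical, chosen only so the ratio matches the reported value; they are not Prineville's actual totals.

```python
# Minimal sketch of the WUE arithmetic, assuming The Green Grid definition:
# WUE = site water usage (liters) / IT equipment energy (kWh), same period.
# The function name is hypothetical, chosen for illustration.

def water_usage_effectiveness(site_water_liters: float, it_energy_kwh: float) -> float:
    """Return WUE in liters of water per kWh of IT equipment energy."""
    if it_energy_kwh <= 0:
        raise ValueError("it_energy_kwh must be positive")
    return site_water_liters / it_energy_kwh


if __name__ == "__main__":
    # Hypothetical inputs that only reproduce the reported ratio;
    # they are NOT Prineville's measured water or energy totals.
    wue = water_usage_effectiveness(28_000, 1_000_000)
    print(f"WUE = {wue:.3f} L/kWh")  # prints "WUE = 0.028 L/kWh"
```

Because WUE is a ratio, cutting water use by an order of magnitude at the same IT load cuts WUE by the same factor of roughly ten.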

In many ways, this example of iterating from one data center building to another illustrates the ethos that pervades how we approach data center design: strive for simplicity and efficiency; focus on speed and flexibility; and be open and collaborative. This approach is at the core of everything we do, and its impact is undeniable: Our drive for greater data center efficiency has played a big role in helping Facebook save more than $1.2 billion in infrastructure costs over the last three years.

Simplicity Breeds Efficiency

Steve Jobs once said, “Simple can be harder than complex: You have to work hard to get your thinking clean to make it simple. But it’s worth it in the end because once you get there, you can move mountains.” Simplicity is at the core of our data center design philosophy, in no small part because it’s crucial to efficiently managing the hundreds of thousands of servers in our fleet.

A peek into a Facebook data center will show what hardware we deploy, but it’s also important to see what’s not there. From the beginning, our hardware teams have practiced a “vanity-free” approach to design, including only the features that are absolutely required for the function of the device. This approach has led to a number of “common sense” decisions, such as removing the plastic bezels from the front of our servers to allow the fans at the back of the servers to draw air across the components without expending as much energy.

It’s also led us to designs that are more innovative. Our custom-designed “Knox” storage server is composed of a storage disk and a compute server from our “Winterfell” web server. By moving to our new “Honey Badger” micro server module in Knox, we eliminate the need for discrete compute servers. In addition to reducing the power draw and lowering cost on a per-server basis, we are able to increase our rack density from nine to 15 servers per rack, further improving our total cost of ownership.
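
The move from nine to 15 servers per rack is simple arithmetic, but its effect on rack count is easy to underestimate. The Python sketch below assumes a hypothetical fleet of 450 servers and uses hypothetical helper names; it models rack count only, not the power or cost effects of moving to Honey Badger.

```python
# Sketch of the rack-density arithmetic only; the fleet size and helper
# names are hypothetical and do not describe Facebook's actual deployment.

def racks_needed(servers: int, servers_per_rack: int) -> int:
    """Racks required to house `servers` at a given density (integer ceiling division)."""
    if servers_per_rack <= 0:
        raise ValueError("servers_per_rack must be positive")
    return -(-servers // servers_per_rack)


def density_gain(old_per_rack: int, new_per_rack: int) -> float:
    """Fractional increase in servers per rack, e.g. about 0.667 for 9 -> 15."""
    return new_per_rack / old_per_rack - 1


if __name__ == "__main__":
    fleet = 450  # hypothetical server count, chosen only to illustrate
    before = racks_needed(fleet, 9)   # 50 racks at nine servers per rack
    after = racks_needed(fleet, 15)   # 30 racks at 15 servers per rack
    print(before, after)              # prints "50 30"
    print(f"{density_gain(9, 15):.0%} more servers per rack")  # prints "67% more servers per rack"
```

At these densities each rack holds about 67% more servers, so the same fleet needs 40% fewer racks (1 − 9/15).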

Removing a little bit of plastic or opening up a little more room in our racks might not seem like it would make much of a

7X24 MAGAZINE FALL 2014